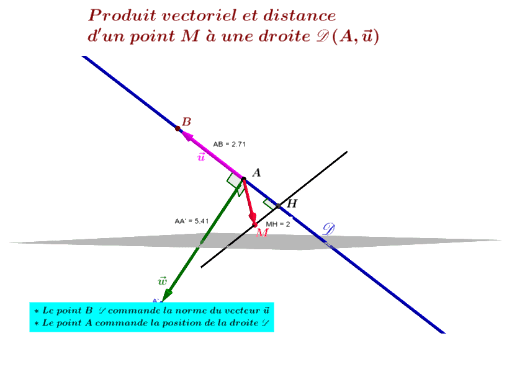

The separating hyperplane will be our decision boundary. All points on one side of the plane will belong to class C1 and all points on the other side of the plane will belong to the second class C2. Unfortunately there are many ways in which we can place a hyperplane to divide the data. Below is an example of two candidate hyperplanes for our data sample. Both H1 and H2 separate the data without making any classification errors. Points above the line are classified as +1 and points below the line are classified as -1. A solution to this problem of finding an ‘optimal’ separating hyperplane is to introduce the concept of a margin. We first select a hyperplane (decision boundary) and then extend two parallel hyperplanes on either side of our decision boundary.
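To make the idea of comparing candidate hyperplanes concrete, here is a minimal sketch that measures how far the closest point sits from each of two candidate lines. The four data points and the two lines `h1` and `h2` are made up for illustration (they are not the post's data sample or the H1 and H2 in the figure), and `geometric_margin` is a hypothetical helper name.

```python
import math

def geometric_margin(points, labels, w, b):
    """Smallest signed distance from any labeled point to the hyperplane w.x + b = 0.

    A negative result means at least one point is on the wrong side.
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm
        for x, y in zip(points, labels)
    )

# Made-up sample (an assumption, not the post's data): two points per class.
points = [(1.0, 1.0), (2.0, 1.0), (4.0, 4.0), (5.0, 4.0)]
labels = [-1, -1, 1, 1]

# Two hypothetical separating lines; both classify the sample without errors.
h1 = ((1.0, 1.0), -5.5)  # x1 + x2 - 5.5 = 0
h2 = ((1.0, 0.0), -2.5)  # x1 - 2.5 = 0

margin_h1 = geometric_margin(points, labels, *h1)
margin_h2 = geometric_margin(points, labels, *h2)
print(margin_h1, margin_h2)
```

Both lines separate the sample, but the first leaves more room around the closest point, which is the sense in which a margin lets us rank otherwise equally error-free hyperplanes.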

Each data point is labeled as a +1 or a -1. In the plot I have + for +1 and – for -1. What a linear classifier attempts to accomplish is to split the feature space into two half-spaces by placing a hyperplane between the data points.
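As a rough sketch of what splitting the feature space into two half-spaces means in practice, the snippet below assigns +1 or -1 depending on which side of a line a point falls. The weights `w`, the offset `b`, and the sample points are all assumptions chosen for illustration, not values from the post.

```python
def classify(x, w, b):
    """Return +1 if x lies on the positive side of w.x + b = 0, otherwise -1."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1

# Hypothetical line x1 + x2 - 5 = 0 and four made-up (x1, x2) points.
w, b = (1.0, 1.0), -5.0
sample = [(1.0, 1.0), (2.0, 2.0), (4.0, 3.0), (5.0, 5.0)]

predicted = [classify(x, w, b) for x in sample]
print(predicted)
```

The same rule works unchanged with more than two features, since it only relies on the sign of the weighted sum.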

In a second post I will discuss a soft margin SVM. In a final post I will discuss what is commonly referred to as the kernel trick to handle non-linear decision boundaries. I borrow heavily from the authors that I will mention at the end of the post, so to add some original content I implement SVM in an Excel spreadsheet using Solver. I find that Excel is a great pedagogical tool and hope you agree with me and find the toy model interesting. In this post I will work with an example in two dimensions so that we can easily visualize the geometry of our classifier. All the points discussed apply equally in higher dimensions. We will work with a binary classification problem. Our feature space will consist of 12 observations of x1 and x2 pairs. In general we will have N observations and P will denote the number of features.


I have been on a machine learning MOOC binge in the last year. The one weakness so far is the treatment of support vector machines (SVM). It’s a shame really since other popular classification algorithms are covered. I should mention that there are two exceptions, Andrew Ng’s Machine Learning on Coursera and the Statistical Learning course on Stanford’s Lagunita platform. Both courses still miss the mark when it comes to SVM in my opinion. Andrew Ng watered down the presentation of SVM classifiers compared to his Stanford class notes but he still treated the subject much better than his competitors. Statistical Learning by Trevor Hastie and Rob Tibshirani was an amazing course but unfortunately SVM was treated somewhat superficially. I hate to be critical of these two courses since overall they are amazing and I am very grateful to the instructors for making this content available for the masses.

I decided to check out some other online content on SVM and have a stab at presenting the material in a style that is friendly to newbies. This will be a three part series with today’s post covering the linearly separable hard margin formulation of SVM.

These are a couple of examples where I ran SVM (written from scratch) over different data sets. This is a high-level view of what SVM does. The yellow dashed line is the line that separates the data (in SVM we call this line the ‘decision boundary’, or hyperplane). The other two lines (also hyperplanes) help us find the right decision boundary.

What is a hyperplane? It is the generalization of a line to higher dimensions: in 1-D it’s called a point, in 2-D it’s called a line, in 3-D it’s called a plane, and in more than 3 dimensions it’s called a hyperplane.

How is SVM’s hyperplane different from that of other linear classifiers? Motivation: maximize the margin. We want to find the classifier whose decision boundary is furthest away from any data point. We can express the separating hyperplane in terms of the data points that are closest to the boundary, and these points are called support vectors. The margin is the distance between the left hyperplane and the right hyperplane. We would like to learn the weights that maximize the margin.
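For readers who like to see the picture in symbols, the decision boundary and the two helper hyperplanes are usually written as follows. This is the standard textbook hard margin notation rather than anything defined in the post so far: the weight vector $w$, the offset $b$, and the scaling of the outer hyperplanes to $\pm 1$ are assumptions of this sketch, while $N$ is the number of observations and $y_i \in \{+1, -1\}$ are the labels.

```latex
% Decision boundary and the two parallel hyperplanes on either side of it
w^\top x + b = 0, \qquad w^\top x + b = +1, \qquad w^\top x + b = -1

% Distance between the two outer hyperplanes (the margin)
\text{margin} = \frac{2}{\lVert w \rVert}

% Maximizing the margin is equivalent to the hard margin problem
\min_{w,\, b} \; \frac{1}{2} \lVert w \rVert^2
\quad \text{subject to} \quad
y_i \left( w^\top x_i + b \right) \ge 1, \qquad i = 1, \dots, N
```

The points for which the constraint holds with equality lie exactly on one of the two outer hyperplanes, and these are the support vectors.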

